最近在训练Yolov8-Pose时遇到一个问题,就是如何将自己使用labelme标注的Json文件转化成可用于Yolov8-Pose训练的txt文件。

具体代码有以下:

1、labelme2coco.py 将自己标注的人体姿态信息json文件格式合并转换成CoCo格式

import os import json import numpy as np import glob import shutil np.random.seed(41) import cv2 #0为背景 classname_to_id = {"person": 1} class Lableme2CoCo: def __init__(self, splitDir=''): self.images = [] self.annotations = [] self.categories = [] self.img_id = 0 self.ann_id = 0 self.splitDir = splitDir def save_coco_json(self, instance, save_path): json.dump(instance, open(save_path, 'w', encoding='utf-8'), ensure_ascii=False, indent=1) # indent=2 更加美观显示 # 由json文件构建COCO def to_coco(self, json_path_list): self._init_categories() for json_path in json_path_list: # print(type(json_path)) obj = self.read_jsonfile(json_path) self.images.append(self._image(obj, json_path)) shapes = obj['shapes'] groupIds = [] for shape in shapes: groupId = shape['group_id'] groupIds.append(groupId) for i in set(groupIds): keyPoints = [0] * 51 keyPointNum = 0 bbox = [] for shape in shapes: if i != shape['group_id']: continue if shape['shape_type'] == "point": labelNum = int(shape['label']) keyPoints[labelNum * 3 + 0] = int(shape['points'][0][0] + 0.5) keyPoints[labelNum * 3 + 1] = int(shape['points'][0][1] + 0.5) keyPoints[labelNum * 3 + 2] = 2 keyPointNum += 1 if shape['shape_type'] == 'rectangle': x0, y0, x1, y1 = shape['points'][0][0], shape['points'][0][1], \ shape['points'][1][0], shape['points'][1][1] xmin = min(x0, x1) ymin = min(y0, y1) xmax = max(x0, x1) ymax = max(y0, y1) bbox = [xmin, ymin, xmax - xmin, ymax - ymin] annotation = self._annotation(bbox, keyPoints, keyPointNum) self.annotations.append(annotation) self.ann_id += 1 self.img_id += 1 # for shape in shapes: # label = shape['label'] # if label != 'person': # continue # # annotation = self._annotation(shape) # self.annotations.append(annotation) # self.ann_id += 1 # self.img_id += 1 instance = {} instance['info'] = 'spytensor created' instance['license'] = ['license'] instance['images'] = self.images instance['annotations'] = self.annotations instance['categories'] = self.categories return instance # 构建类别 def _init_categories(self): for k, v in classname_to_id.items(): category = {} category['id'] = v category['name'] = k self.categories.append(category) # 构建COCO的image字段 def _image(self, obj, jsonPath): image = {} # img_x = utils.img_b64_to_arr(obj['imageData']) # h, w = img_x.shape[:-1] jpgPath = jsonPath.replace('.json', '.jpg') jpgData = cv2.imread(jpgPath) h, w, _ = jpgData.shape image['height'] = h image['width'] = w image['id'] = self.img_id # image['file_name'] = os.path.basename(jsonPath).replace(".json", ".jpg") image['file_name'] = jpgPath.split(self.splitDir)[-1].replace('\\', '/') return image # 构建COCO的annotation字段 def _annotation(self, bbox, keyPoints, keyNum): annotation = {} annotation['id'] = self.ann_id annotation['image_id'] = self.img_id annotation['category_id'] = 1 # annotation['segmentation'] = [np.asarray(points).flatten().tolist()] annotation['segmentation'] = [] annotation['bbox'] = bbox annotation['iscrowd'] = 0 annotation['area'] = bbox[2] * bbox[3] annotation['keypoints'] = keyPoints annotation['num_keypoints'] = keyNum return annotation # 读取json文件,返回一个json对象 def read_jsonfile(self, path): with open(path, "r", encoding='utf-8') as f: return json.load(f) # COCO的格式: [x1,y1,w,h] 对应COCO的bbox格式 def _get_box(self, points): min_x = min_y = np.inf max_x = max_y = 0 for x, y in points: min_x = min(min_x, x) min_y = min(min_y, y) max_x = max(max_x, x) max_y = max(max_y, y) return [min_x, min_y, max_x - min_x, max_y - min_y] if __name__ == '__main__': labelme_path = r"G:\XRW\Data\selfjson" print(labelme_path) jsonName = labelme_path.split('\\')[-1] saved_coco_path = r"G:\XRW\Data\mycoco" print(saved_coco_path) ##################################### # 这个一定要注意 # 为了方便合入coco数据, 定义截断文件的文件夹与文件名字 splitDirFlag = 'labelMePoint\\' ###################################### # 创建文件 if not os.path.exists("%s/annotations/"%saved_coco_path): os.makedirs("%s/annotations/"%saved_coco_path) json_list_path = glob.glob(os.path.join(labelme_path, '*.json')) train_path, val_path = json_list_path, '' # print(train_path) print("train_n:", len(train_path), 'val_n:', len(val_path)) # 把训练集转化为COCO的json格式 l2c_train = Lableme2CoCo(splitDirFlag) # print(train_path) train_instance = l2c_train.to_coco(train_path) l2c_train.save_coco_json(train_instance, '%s/annotations/%s.json'%(saved_coco_path, jsonName))

labelme_path:自己标注的json文件路径

saved_coco_path:生成的CoCo格式保存位置

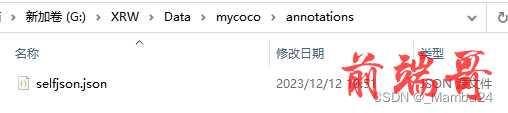

运行代码得到

2、将生成的CoCo格式的Json文件转换成Yolov8-Pose格式的txt文件

utils.py

import glob import os import shutil from pathlib import Path import numpy as np from PIL import ExifTags from tqdm import tqdm # Parameters img_formats = ['bmp', 'jpg', 'jpeg', 'png', 'tif', 'tiff', 'dng'] # acceptable image suffixes vid_formats = ['mov', 'avi', 'mp4', 'mpg', 'mpeg', 'm4v', 'wmv', 'mkv'] # acceptable video suffixes # Get orientation exif tag for orientation in ExifTags.TAGS.keys(): if ExifTags.TAGS[orientation] == 'Orientation': break def exif_size(img): # Returns exif-corrected PIL size s = img.size # (width, height) try: rotation = dict(img._getexif().items())[orientation] if rotation in [6, 8]: # rotation 270 s = (s[1], s[0]) except: pass return s def split_rows_simple(file='../data/sm4/out.txt'): # from utils import *; split_rows_simple() # splits one textfile into 3 smaller ones based upon train, test, val ratios with open(file) as f: lines = f.readlines() s = Path(file).suffix lines = sorted(list(filter(lambda x: len(x) > 0, lines))) i, j, k = split_indices(lines, train=0.9, test=0.1, validate=0.0) for k, v in {'train': i, 'test': j, 'val': k}.items(): # key, value pairs if v.any(): new_file = file.replace(s, f'_{k}{s}') with open(new_file, 'w') as f: f.writelines([lines[i] for i in v]) def split_files(out_path, file_name, prefix_path=''): # split training data file_name = list(filter(lambda x: len(x) > 0, file_name)) file_name = sorted(file_name) i, j, k = split_indices(file_name, train=0.9, test=0.1, validate=0.0) datasets = {'train': i, 'test': j, 'val': k} for key, item in datasets.items(): if item.any(): with open(f'{out_path}_{key}.txt', 'a') as file: for i in item: file.write('%s%s\n' % (prefix_path, file_name[i])) def split_indices(x, train=0.9, test=0.1, validate=0.0, shuffle=True): # split training data n = len(x) v = np.arange(n) if shuffle: np.random.shuffle(v) i = round(n * train) # train j = round(n * test) + i # test k = round(n * validate) + j # validate return v[:i], v[i:j], v[j:k] # return indices def make_dirs(dir='new_dir/'): # Create folders dir = Path(dir) if dir.exists(): shutil.rmtree(dir) # delete dir for p in dir, dir / 'labels', dir / 'images': p.mkdir(parents=True, exist_ok=True) # make dir return dir def write_data_data(fname='data.data', nc=80): # write darknet *.data file lines = ['classes = %g\n' % nc, 'train =../out/data_train.txt\n', 'valid =../out/data_test.txt\n', 'names =../out/data.names\n', 'backup = backup/\n', 'eval = coco\n'] with open(fname, 'a') as f: f.writelines(lines) def image_folder2file(folder='images/'): # from utils import *; image_folder2file() # write a txt file listing all imaged in folder s = glob.glob(f'{folder}*.*') with open(f'{folder[:-1]}.txt', 'w') as file: for l in s: file.write(l + '\n') # write image list def add_coco_background(path='../data/sm4/', n=1000): # from utils import *; add_coco_background() # add coco background to sm4 in outb.txt p = f'{path}background' if os.path.exists(p): shutil.rmtree(p) # delete output folder os.makedirs(p) # make new output folder # copy images for image in glob.glob('../coco/images/train2014/*.*')[:n]: os.system(f'cp {image} {p}') # add to outb.txt and make train, test.txt files f = f'{path}out.txt' fb = f'{path}outb.txt' os.system(f'cp {f} {fb}') with open(fb, 'a') as file: file.writelines(i + '\n' for i in glob.glob(f'{p}/*.*')) split_rows_simple(file=fb) def create_single_class_dataset(path='../data/sm3'): # from utils import *; create_single_class_dataset('../data/sm3/') # creates a single-class version of an existing dataset os.system(f'mkdir {path}_1cls') def flatten_recursive_folders(path='../../Downloads/data/sm4/'): # from utils import *; flatten_recursive_folders() # flattens nested folders in path/images and path/JSON into single folders idir, jdir = f'{path}images/', f'{path}json/' nidir, njdir = Path(f'{path}images_flat/'), Path(f'{path}json_flat/') n = 0 # Create output folders for p in [nidir, njdir]: if os.path.exists(p): shutil.rmtree(p) # delete output folder os.makedirs(p) # make new output folder for parent, dirs, files in os.walk(idir): for f in tqdm(files, desc=parent): f = Path(f) stem, suffix = f.stem, f.suffix if suffix.lower()[1:] in img_formats: n += 1 stem_new = '%g_' % n + stem image_new = nidir / (stem_new + suffix) # converts all formats to *.jpg json_new = njdir / f'{stem_new}.json' image = parent / f json = Path(parent.replace('images', 'json')) / str(f).replace(suffix, '.json') os.system("cp '%s' '%s'" % (json, json_new)) os.system("cp '%s' '%s'" % (image, image_new)) # cv2.imwrite(str(image_new), cv2.imread(str(image))) print('Flattening complete: %g jsons and images' % n) def coco91_to_coco80_class(): # converts 80-index (val2014) to 91-index (paper) # https://tech.amikelive.com/node-718/what-object-categories-labels-are-in-coco-dataset/ x = [0, 1, 2, 3, 4, 5, 6, 7, 8, 9, 10, None, 11, 12, 13, 14, 15, 16, 17, 18, 19, 20, 21, 22, 23, None, 24, 25, None, None, 26, 27, 28, 29, 30, 31, 32, 33, 34, 35, 36, 37, 38, 39, None, 40, 41, 42, 43, 44, 45, 46, 47, 48, 49, 50, 51, 52, 53, 54, 55, 56, 57, 58, 59, None, 60, None, None, 61, None, 62, 63, 64, 65, 66, 67, 68, 69, 70, 71, 72, None, 73, 74, 75, 76, 77, 78, 79, None] return x

slefjson2posetxt.py复制

import json import cv2 import pandas as pd from PIL import Image from collections import defaultdict from utils import * def convert_coco_json(cocojsonpath, savepath,use_keypoints=False, cls91to80=True): """Converts COCO dataset annotations to a format suitable for training YOLOv5 models. Args: labels_dir (str, optional): Path to directory containing COCO dataset annotation files. use_segments (bool, optional): Whether to include segmentation masks in the output. use_keypoints (bool, optional): Whether to include keypoint annotations in the output. cls91to80 (bool, optional): Whether to map 91 COCO class IDs to the corresponding 80 COCO class IDs. Raises: FileNotFoundError: If the labels_dir path does not exist. Example Usage: convert_coco(labels_dir='../coco/annotations/', use_segments=True, use_keypoints=True, cls91to80=True) Output: Generates output files in the specified output directory. """ # save_dir = make_dirs('yolo_labels') # output directory save_dir = make_dirs(savepath) # output directory coco80 = coco91_to_coco80_class() # Import json for json_file in sorted(Path(cocojsonpath).resolve().glob('*.json')): fn = Path(save_dir) / 'labels' / json_file.stem.replace('instances_', '') # folder name fn.mkdir(parents=True, exist_ok=True) with open(json_file) as f: data = json.load(f) # Create image dict images = {f'{x["id"]:d}': x for x in data['images']} # Create image-annotations dict imgToAnns = defaultdict(list) for ann in data['annotations']: imgToAnns[ann['image_id']].append(ann) # Write labels file for img_id, anns in tqdm(imgToAnns.items(), desc=f'Annotations {json_file}'): img = images[f'{img_id:d}'] h, w, f = img['height'], img['width'], img['file_name'] bboxes = [] segments = [] keypoints = [] for ann in anns: if ann['iscrowd']: continue # The COCO box format is [top left x, top left y, width, height] box = np.array(ann['bbox'], dtype=np.float64) box[:2] += box[2:] / 2 # xy top-left corner to center box[[0, 2]] /= w # normalize x box[[1, 3]] /= h # normalize y if box[2] <= 0 or box[3] <= 0: # if w <= 0 and h <= 0 continue cls = coco80[ann['category_id'] - 1] if cls91to80 else ann['category_id'] - 1 # class box = [cls] + box.tolist() if box not in bboxes: bboxes.append(box) if use_keypoints and ann.get('keypoints') is not None: k = (np.array(ann['keypoints']).reshape(-1, 3) / np.array([w, h, 1])).reshape(-1).tolist() k = box + k keypoints.append(k) # Write fname = f.split('/')[-1] # with open((fn / f).with_suffix('.txt'), 'a') as file: with open((fn / fname).with_suffix('.txt'), 'a') as file: for i in range(len(bboxes)): if use_keypoints: line = *(keypoints[i]), # cls, box, keypoints file.write(('%g ' * len(line)).rstrip() % line + '\n') if __name__ == '__main__': source = 'COCO' cocojsonpath = r'G:\XRW\Data\mycoco\annotations' savepath = r'G:\XRW\Data\myposedata' if source == 'COCO': convert_coco_json(cocojsonpath, # directory with *.json savepath, use_keypoints=True, cls91to80=True)

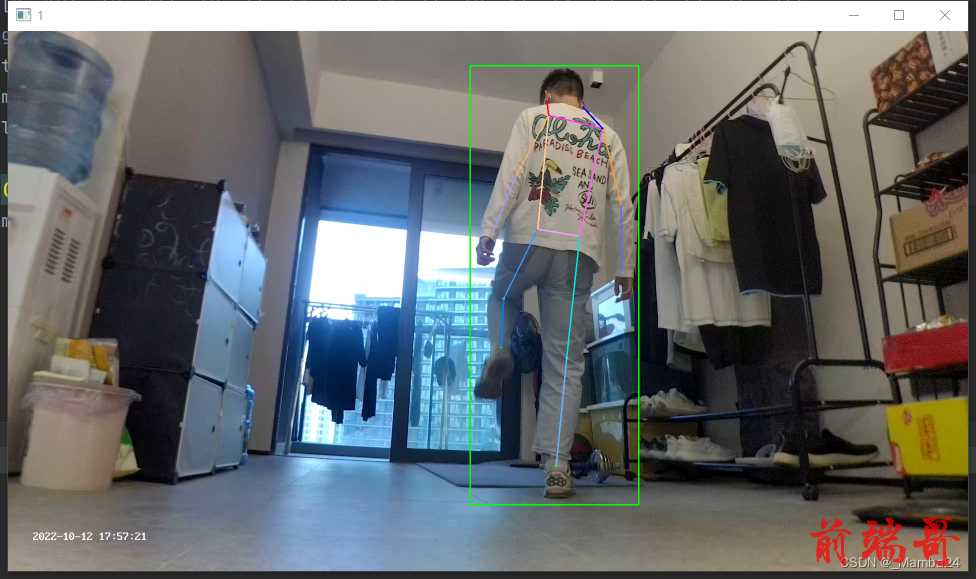

运行代码得到:

<class-index>是对象的类的索引,<x> <y> <width> <height>是边界框的坐标,<px1> <py1> <px2> <py2> ... <pxn> <pyn>是关键点的像素坐标。坐标由空格分隔。

3、检查生成的txt是否准确

PoseVisualization.py:将txt的信息可视化在图片上进行验证。复制

import cv2 imgpath = r'G:\XRW\Data\selfjson\five_22101205_000930.jpg' txtpath = r'G:\XRW\Data\myposedata\labels\selfjson\five_22101205_000930.txt' f = open(txtpath,'r') lines = f.readlines() img = cv2.imread(imgpath) h, w, c = img.shape colors = [[255, 128, 0], [255, 153, 51], [255, 178, 102], [230, 230, 0], [255, 153, 255], [153, 204, 255], [255, 102, 255], [255, 51, 255], [102, 178, 255], [51, 153, 255], [255, 153, 153], [255, 102, 102], [255, 51, 51], [153, 255, 153], [102, 255, 102], [51, 255, 51], [0, 255, 0], [0, 0, 255], [255, 0, 0], [255, 255, 255]] for line in lines: print(line) l = line.split(' ') print(len(l)) cx = float(l[1]) * w cy = float(l[2]) * h weight = float(l[3]) * w height = float(l[4]) * h xmin = cx - weight/2 ymin = cy - height/2 xmax = cx + weight/2 ymax = cy + height/2 print((xmin,ymin),(xmax,ymax)) cv2.rectangle(img,(int(xmin),int(ymin)),(int(xmax),int(ymax)),(0,255,0),2) kpts = [] for i in range(17): x = float(l[5:][3*i]) * w y = float(l[5:][3*i+1]) * h s = int(l[5:][3*i+2]) print(x,y,s) if s != 0: cv2.circle(img,(int(x),int(y)),1,colors[i],2) kpts.append([int(x),int(y),int(s)]) print(kpts) kpt_line = [[16, 14], [14, 12], [17, 15], [15, 13], [12, 13], [6, 12], [7, 13], [6, 7], [6, 8], [7, 9], [8, 10], [9, 11], [2, 3], [1, 2], [1, 3], [2, 4], [3, 5], [4, 6], [5, 7]] for j in range(len(kpt_line)): m,n = kpt_line[j][0],kpt_line[j][1] if kpts[m-1][2] !=0 and kpts[n-1][2] !=0: cv2.line(img,(kpts[m-1][0],kpts[m-1][1]),(kpts[n-1][0],kpts[n-1][1]),colors[j],2) img = cv2.resize(img, None, fx=0.5, fy=0.5) cv2.imshow('1',img) cv2.waitKey(0)

这样就将自己的Json格式转成训练Yolov8-Pose姿态的txt格式了。

4、将图片copy到对应路径中

以上步骤完成后只生成了txt,需要再将对应的图片copy到对应路径中。

pickImg.py

import glob import os import shutil imgpath = r'G:\XRW\Data\selfjson' txtpath = r'G:\XRW\Data\myposedata\labels\selfjson' savepath = r'G:\XRW\Data\myposedata\images\selfjson' os.makedirs(savepath,exist_ok=True) imglist = glob.glob(os.path.join(imgpath ,'*.jpg')) # print(imglist) txtlist = glob.glob(os.path.join(txtpath ,'*.txt')) # print(txtlist) for img in imglist: name = txtpath + '\\' +img.split('\\')[-1].split('.')[0 ] +'.txt' print(name) if name in txtlist: shutil.copy(img ,savepath)

- imgpath CoCo数据集图片路径

- txtpath 生成的txt路径

- savepath 保存图片的路径

这样就将自己标注的数据集转换成Yolov8-Pose格式的txt了。